Artificial Intelligence as a Theatre Director

school of x in connection with xCoAx

class of 2023

Abstract

Despite numerous applications in live performance, Artificial Intelligence (AI) seems to be employed as a passive element, neither deciding nor actively shaping what happens on stage. I explore the possibility of AI as an active element, able to direct performance. To push this scenario to its limits, I suggest implementing AI in the “eterodirezione” (hetero-direction) theatre device, where actors are given their part through in-ear monitors. I discuss the two possible outcomes of the implementation, “asynchronous direction” and “synchronic direction”, and I focus on the latter, where AI composes dramaturgy and instructs the actors in real time. Finally, I discuss the major consequences of this new device, concerning the nature of the performance, the human-machine interface and the role of the director and actors. AI and actors prompt each other, improvising in a prompting loop, where they both become co-directors and co-performers of the performance, whereas the human director acts as a demiurge-like figure. This contribution is intended as a first theoretical framework in the process of creating a new theatre language where AI is an active element of performance.

Keywords

Artificial Intelligence, Theatre, Hetero-Direction, Synchronic Direction, Prompting Loop

Intro

Automation and machinery have long fascinated theatre-makers and found extensive application. In Stifters Dinge1 (2007), Heiner Goebbels realised a fully automated live music performance staging complex machinery, which led scholars to speak of “Robot Opera” (Sigman 2019). In 2020, the Copernicus Science Centre in Warsaw opened the first “Robotic Theatre” in the world2.

Similarly, AI has drawn the attention of performance artists. In February 2021, “the first computer-generated theatre play” was staged as part of the THEaiTRE project3 (Rosa 2020). Numerous other applications have already appeared, in which the databases employed are either pre-existing, specifically designed for the performance, or generated by discretisation of scenic elements. Among these are Corpus Nil4 (2016) by Marco Donnarumma, and Discrete Figures5 (2018) by Elevenplay, Rhizomatiks Research and Kyle McDonald. In the former, AI synthesises sonic elements after processing signals collected via microphones and electrodes installed on the performer’s body. In the latter, human performers interact onstage with pre-rendered 3D figures whose movements are generated by AI after processing a video database of human performers’ dance sequences (Befera and Bioglio 2022). In her interactive meta-drama AI6 (2021), Jennifer Tang stages over multiple evenings the creation of a play through GPT-3 (Akbar 2021).

In the above examples, AI seems to be employed as a mere tool, namely as a pre-scenic instrument to compose a dramaturgy or a movement sequence (as in the THEaiTRE project, AI, and Discrete Figures) or as an on-stage complement translating into sound, light or scenography (as in Corpus Nil). AI outputs are therefore either controlled and positioned in advance within the overall dramaturgy, or allowed to have an impact on the performance in real time within pre-set limits. This is consistent with the ongoing debate on Generative Art, which tends to refute AI autonomy in the creative process (Hertzmann 2018). The concept of “Meaningful Human Control” (MHC) applied to Generative Art (Akten 2021) strengthens this view, configuring “generative AI as a tool to support human creators”, who are the only agents accountable for the creative output (Epstein 2023).

Nevertheless, I argue that in Generative Art, AI seems to exhibit a certain degree of autonomy. After an initial prompt by the human user, the AI software is responsible for the image synthesis: what happens “inside the machine” cannot be monitored and the output cannot be foreseen. In this sense, AI exhibits active features. In theatre, however, no examples have yet emerged where AI is employed as an active element shaping, creating, and ultimately directing the theatre performance. The above-mentioned projects show in fact that AI can contribute with a certain degree of agency and unpredictability, for example by synthesising sonic elements, but cannot fully decide what happens onstage. A notable exception is the Improbotics experiment7, an improvising device where AI provides lines to the actors. However, the presence of “free-will improvisers” who do not receive lines from AI, and the restriction of AI intervention to textual dramaturgy, ultimately limit, in my view, its autonomy on stage.

In this essay, I address the question of whether and to what extent AI can have an active role in theatre performance, in the sense outlined above. To answer this question, a way of introducing AI into theatre performance needs to be found that lets AI shape the performance and maximises its agency. I identify an already existing performance device to be implemented with AI and discuss the main consequences arising from this operation. My contribution aims to serve as a theoretical attempt to frame AI implementation in theatre and to shed light on some interesting aspects: concerning AI research, it helps to clarify the role of prompts and embodiment in the human-machine interface; from a theatrical standpoint, it helps to reaffirm the role of the human in a world progressively centred on virtuality. Ultimately, this essay is intended as a “declaration of intent” towards the creation of a new language in the performing arts, with further theoretical and practical studies intended as the natural follow-up.

Hetero-direction

As a basis for exploration, I suggest the method of “eterodirezione” (“hetero-direction”) invented and developed by the Italian experimental company Fanny&Alexander. Hetero-direction consists “in having the performer receive the directions for his or her part in the scene through an earpiece” (Di Bari 2021). The performer plays “lines and actions ‘administered’ by the directing division” without having to learn them by heart and without knowing the exact order of their part. This prevents “the routine and repetitiveness” built into the theatrical act (Margiotta 2020, my translation).

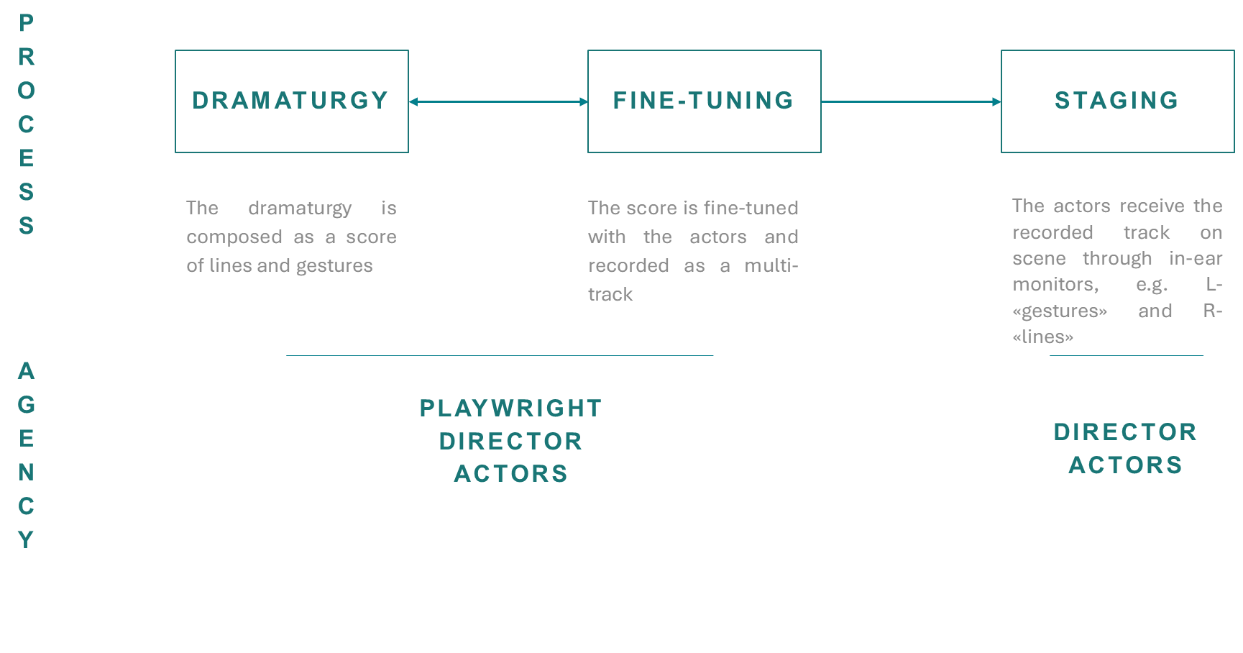

A closer look at the method provides useful insights into the possibility of AI implementation. I identify three phases of the creative process, summarised in the scheme of Figure 1, which will serve as a reference for the next sections. As is common in theatre practice, “dramaturgy” can here refer to a broad variety of performance components, ranging from text to music, light, and space design. To point out the distinctive features of hetero-direction, I will henceforth limit myself to dramaturgy as text and as a sequence of movements or physical actions.

(i) In the first step, the dramaturgy is composed by the playwright and/or director and/or actors as a textual and/or physical score. The latter usually takes the form of a detailed (textual) list of precisely (physically) coded gestures, each with a corresponding identifier, which the actors will memorise as a physical “vocabulary”.

(ii) The score is then rehearsed and refined with the interpreters.

(iii) During the live performance, staged by the director, the set of instructions is transmitted to the actors through earphones, either through live dictation or as a pre-recorded track, e.g. with instructions for gestures recorded on the L-channel and instructions for lines on the R-channel (Margiotta 2020).

In my view, introducing AI may result in further developments of the device and in AI exhibiting an active role in live performance. I argue that AI can be introduced in hetero-direction in two ways:

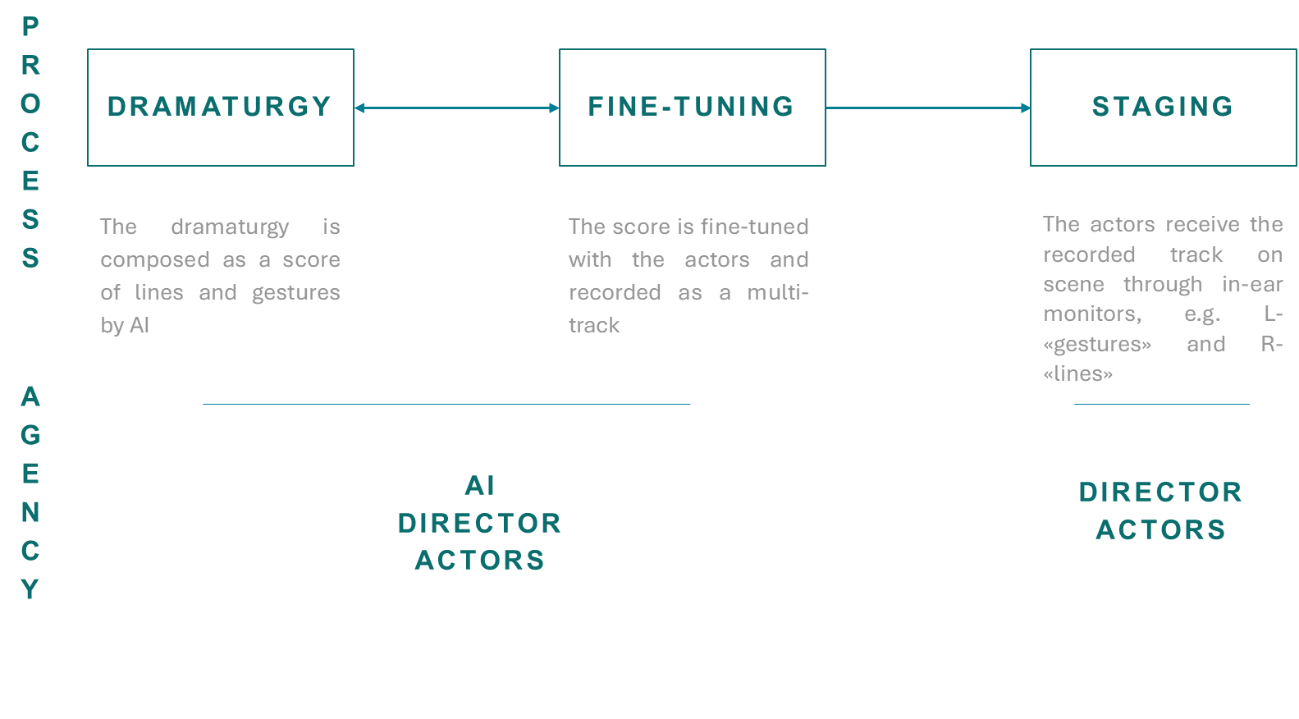

In asynchronous direction, AI takes the function of the playwright in Figure 1: the whole dramaturgy is partially or totally written beforehand by AI and subsequently transmitted to the actors through real-time dictation or as a pre-recorded track. AI is employed as a mere tool and stage direction falls within human competence.

In synchronic direction, AI creates the dramaturgy during the live performance, reacting to what happens onstage and giving the actors real-time instructions. In this scenario, AI is present at all stages of Figure 1 and eventually directs the performance.

Asynchronous and Synchronic Direction

In asynchronous direction (see Figure 2), the hetero-direction scheme outlined in the previous section undergoes no relevant changes: the dramaturgy is composed in the first step, rehearsed and fine-tuned by the director and actors, and eventually transmitted onstage to the interpreters. The only difference consists in the implementation of AI in the first step. As we have seen in the Intro, the composition by AI of both a play and sequences of movements has been proven possible. Nevertheless, in asynchronous direction, the result of AI implementation is analogous to that encountered in THEaiTRE or in Discrete Figures: AI is employed as a tool and does not actively direct the performance, which is ultimately staged by the human directing division. Moreover, the AI component would likely not be easily noticeable to the audience, since the staging is entirely human-driven. For these reasons, I consider this option less interesting and will focus on the second one.

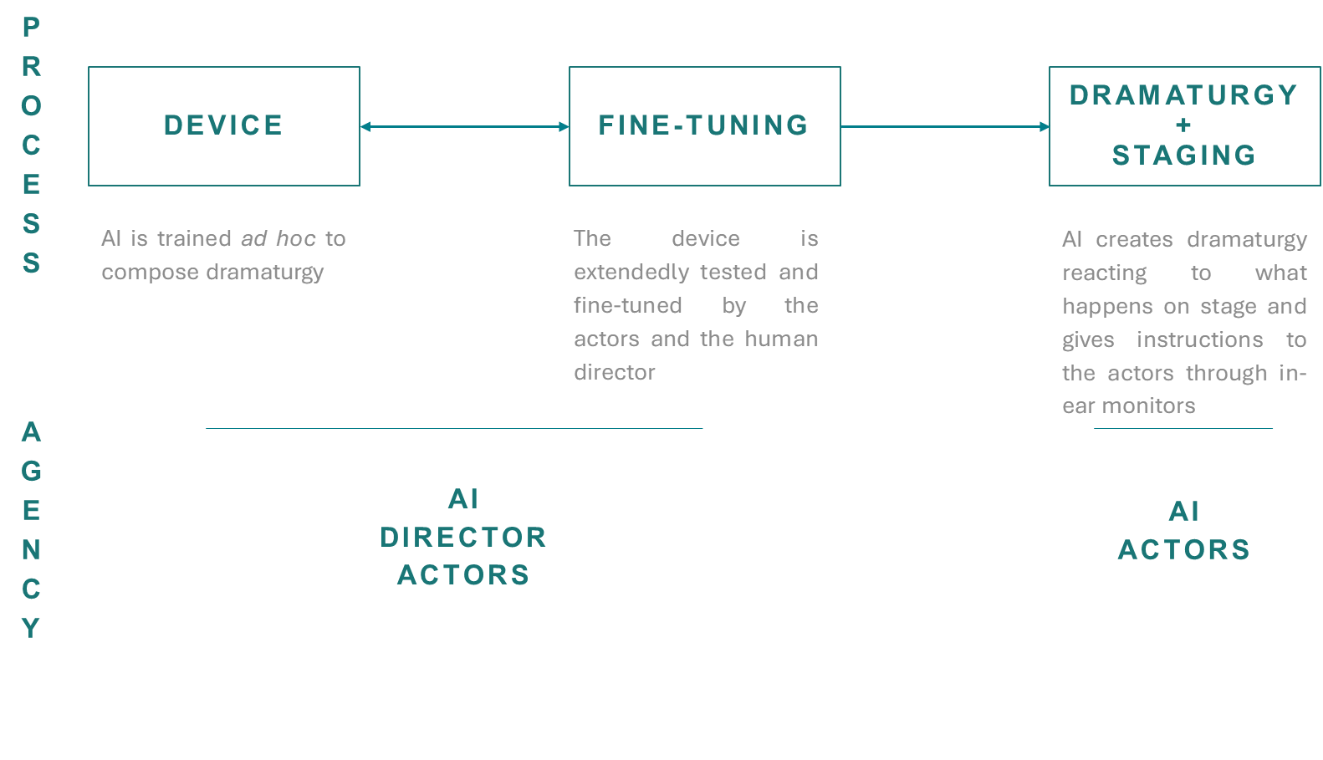

Since the dramaturgy is composed by AI onstage, in synchronic direction (see Figure 3) the original scheme is drastically changed, although the three moments of design, tuning and staging are preserved:

(i) In the first step, the device needs to be designed and put in place. This means programming and training an AI model to detect and react to all the scenic variables while composing new dramaturgy and instructing the actors. A whole technological apparatus needs to be set up, allowing AI to perceive what is happening onstage, for example through a scene-mapping mechanism made up of microphones, cameras, and sensors. AI could therefore recognise which positions are occupied onstage and react with an “if…then” logic, such as: if actor 1 is in A, then actor 1 goes to C and says “potato”.

(ii) In the second step, the functioning of the whole device is tested and fine-tuned by the director and through interaction with actors. Given the complexity of the apparatus, steps (i) and (ii) are likely to be performed in a loop every time a major difficulty arises during rehearsals, until the device is stable and does not jam.

(iii) The third step consists of the final performance. Here, staging and dramaturgy creation overlap. AI composes the dramaturgy and gives instructions to the actors, who perform them, providing in turn material for the AI to react to.
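The “if…then” logic mentioned in step (i) can be illustrated with a minimal Python sketch. It is a toy under stated assumptions, not a proposal for the actual device: the names `Instruction`, `RULES` and `direct` are hypothetical, the stage is assumed to be already mapped into labelled zones, and a hand-written rule table stands in for whatever trained model would be used in practice.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Instruction:
    """One instruction sent to an actor's earpiece (hypothetical format)."""
    actor: str
    move_to: str
    line: str


# Hypothetical rule table: (actor, zone currently occupied) -> instruction.
# The single entry encodes the example from the text.
RULES = {
    ("actor 1", "A"): Instruction(actor="actor 1", move_to="C", line="potato"),
}


def direct(stage_state: dict[str, str]) -> list[Instruction]:
    """Return one instruction for every actor whose position matches a rule.

    `stage_state` maps each actor to the zone reported by the (assumed)
    scene-mapping apparatus.
    """
    return [
        RULES[(actor, zone)]
        for actor, zone in stage_state.items()
        if (actor, zone) in RULES
    ]


for instruction in direct({"actor 1": "A", "actor 2": "B"}):
    print(f"{instruction.actor}: go to {instruction.move_to}, say '{instruction.line}'")
```

In this sketch only actor 1 receives an instruction, since no rule covers actor 2 standing in B.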

The uniqueness of this scenario is evident: the AI is no longer employed as a tool or a complement but actively shapes the live performance, to the extent that it could be argued to be the actual director. This scenario entails several consequences on both a theoretical and practical level, some of which I address in the next sections. I will focus on the nature of a performance realised through synchronic direction, the role of the human component, prompts and creativity.

Prompting Loops, Co-workers, and Demiurges

Fabian Offert points out two interesting commonalities between theatre and machine learning (ML) (Offert 2019). On the one hand, they both “focus on discrete state transformations”, i.e. the transitioning between fixed states (be they machine states or those resulting from the composition of scenic elements) inside a “black-box assemblage” corresponding to the mise-en-scene in theatre and to the machine set-up in ML. On the other hand, they must both deal with an external singularity element they need to make sense of. Some theatre states are in fact “probabilistic”, insofar as they are affected by an external influence, such as improvisation or the presence of the audience. ML must instead extract “probability distributions from […] real-world data”. Accordingly, they are both connecting their “black-box” assemblage with the outside world, to “make sense” of it. Quoting Offert:

“What theater and machine learning have in common is the setting up of an elaborate, controlled apparatus for making sense of everything that is outside of this apparatus: real life in the case of theater, real life data in the case of machine learning.” (Offert 2019, his italics).

In synchronic direction, not only do theatre and ML exhibit this common feature, but they are also connected in a loop. This is clear when considering the nature and role of the prompt in this device. In Generative AI models, prompts usually consist of text lines which trigger a cascade of untraceable AI processes and result in a textual or image output. In our case, instead, the prompt is a physical component that is captured and quantised by the technological apparatus: the actors’ blood pressure or voice, a position in the space, etc. In a word, the body is here the prompt. The process, though, does not end here. AI processes the input, synthesises new elements of dramaturgy and in turn instructs, i.e. prompts, the actors. Synchronic direction therefore works as a prompting loop.
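The structure of the prompting loop can be sketched in a few lines of Python. This is only an illustration: `prompting_loop`, `toy_ai` and `toy_actor` are hypothetical names, the quantised body signal is reduced to a single assumed `voice_level` value, and both the AI and the actor are replaced by deterministic stand-ins so that the alternation is easy to follow.

```python
from typing import Callable

Reading = dict[str, float]  # hypothetical quantised body signals
Instruction = str           # hypothetical instruction sent to the earpiece


def prompting_loop(
    first_reading: Reading,
    ai_step: Callable[[Reading], Instruction],
    actor_step: Callable[[Instruction], Reading],
    rounds: int,
) -> list[tuple[Instruction, Reading]]:
    """Alternate AI and actor for a fixed number of rounds, logging each exchange."""
    history = []
    reading = first_reading
    for _ in range(rounds):
        instruction = ai_step(reading)     # the AI prompts the actor
        reading = actor_step(instruction)  # the actor's body prompts the AI
        history.append((instruction, reading))
    return history


def toy_ai(reading: Reading) -> Instruction:
    # Stand-in for the model: ask for the opposite of what was just captured.
    return "whisper" if reading["voice_level"] > 0.5 else "shout"


def toy_actor(instruction: Instruction) -> Reading:
    # Stand-in for a human performer and the sensors capturing them.
    return {"voice_level": 0.2 if instruction == "whisper" else 0.9}


history = prompting_loop({"voice_level": 0.9}, toy_ai, toy_actor, rounds=3)
print([instruction for instruction, _ in history])
```

With these stand-ins the printed sequence is `['whisper', 'shout', 'whisper']`. A real performer would of course not respond deterministically, which is precisely the source of unpredictability the essay relies on.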

In such a system, what is the singularity element pointed out by Offert? I argue that theatre and ML constitute the singularity source of one another. For theatre (the actors), singularity comes from the untraceable processes of AI and the unpredictability of its output. For ML, singularity comes from theatre and from all the external elements that can influence a theatre performance. This is where the role of the actor becomes clear. One could argue that synchronic direction flattens human creativity and reduces the actor to a “mere tool”, a puppet manipulated by AI. On the contrary: the actors bring into the device all the unpredictability inherent to their being human. Just as every dancer moves through the same choreography in a personal way, interpreting the pre-established sequence of movements through their own peculiar sensibility and body, so in synchronic direction the actors will perform AI instructions according to their own individuality. Moreover, their creativity will be solicited by the unpredictability of AI outputs. The same AI prompt results in different human outputs depending on who is performing it: different reactions will result in different quantised signals, triggering AI in different ways. As a result, human creativity is preserved, if not enhanced, and actors and AI are co-improvising, co-directing, and co-performing. Consistent with the concept of a prompting loop, AI is not only directing but also performing, and actors are not only performing but also directing.

It is worth noting that the prompting loop also highlights the uniqueness and irreplaceability of the human onstage. A synchronic direction device where no actors were involved, or where actors were replaced by robots, would arguably have no singularity other than the audience element or unlikely external events. This would result in the AI prompting itself or some entities designed to repeat the same task mechanically, always in the same way. Without a human being onstage, the performance would soon lose its driving force, which ultimately lies in the unpredictability of the human.

The role of the human director in synchronic direction remains to be discussed. It appears that, in a prompting loop, no place for a director is envisaged. Indeed, I argue that the role of the human director is mostly limited to the design and rehearsal phases and is of a different nature than the conventional one (i.e. the “omniscient creator” of the performance). To recall the analogy with Generative AI models, the human director acts in our case as a hybrid between a technologist, an engineer, and a supervisor. They are the figure who coordinates the apparatus setup, verifies its functioning and its interactions with the actors, and sets the limits for the performance staging. To prevent the performance from degenerating into a nonsensical improvisation, rules need in fact to be established, concerning for example the overall dramaturgical context or “topic”, the rhythm and state transition frequency, the allowed thresholds of sound and light effects, etc. Retrieving a definition often encountered in the history of theatre direction, the human director acts here as a clock-maker or as a “kind of a demiurge” (Artaud 1958). Nevertheless, the director has no say in what happens onstage and what the audience is going to watch, since only the two elements of the prompting loop, the actors and AI, are co-directing and co-performing onstage.
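The kind of limits the director might set in advance can be pictured as a small configuration that every AI output has to pass through. The following Python sketch is purely illustrative: `Limits`, `Cue` and `apply_limits` are hypothetical names, the numeric values are arbitrary, and only the rhythm and the sound and light thresholds are modelled (the dramaturgical “topic” is left out because it cannot be reduced to a simple numeric check).

```python
from dataclasses import dataclass, replace
from typing import Optional


@dataclass(frozen=True)
class Limits:
    """Rules fixed by the director before the performance (hypothetical)."""
    min_gap_s: float     # minimum seconds between two state transitions
    max_sound_db: float  # allowed threshold for sound effects
    max_light: float     # allowed threshold for light intensity, 0.0 to 1.0


@dataclass(frozen=True)
class Cue:
    """A sound and light change proposed by the AI (hypothetical)."""
    sound_db: float
    light: float


def apply_limits(cue: Cue, limits: Limits, seconds_since_last_cue: float) -> Optional[Cue]:
    """Drop a cue that comes too early; otherwise clamp it to the thresholds."""
    if seconds_since_last_cue < limits.min_gap_s:
        return None
    return replace(
        cue,
        sound_db=min(cue.sound_db, limits.max_sound_db),
        light=min(cue.light, limits.max_light),
    )


limits = Limits(min_gap_s=5.0, max_sound_db=85.0, max_light=0.8)
print(apply_limits(Cue(sound_db=100.0, light=0.5), limits, seconds_since_last_cue=10.0))
print(apply_limits(Cue(sound_db=60.0, light=0.5), limits, seconds_since_last_cue=2.0))
```

The first cue is let through with its sound level capped at the threshold, while the second is dropped because it arrives before the minimum gap has elapsed. The director thus fixes the frame once, and has no further say in which cues are proposed within it.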

Outro

In this essay, I attempted to frame theoretically the implementation of AI in theatre and to set the ground for practical experiments. My interest was to understand to what extent AI can become an active and directing entity in live performances. To push this possibility to the limit, I proposed to implement AI in the “hetero-direction” device and focused on one of the possible outcomes, which I called “synchronic direction”. Here, AI composes dramaturgy and instructs the actors in real-time: AI can indeed direct a performance actively. A closer look at this system helps to clarify the role of the human in the device and, overall, in live performances. Considering the prompting loop: (i) human unpredictability appears to be an unavoidable element of performances; (ii) creativity is preserved, if not enhanced; (iii) actors and AI co-improvise, co-direct and co-perform onstage; (iv) the human director represents a demiurge-like figure.

For the sake of clarity, however, dramaturgical elements such as space, light and sound design were not considered and should be included in further developments of this model. The role of improvisation, which appeared to underlie the human-AI onstage interaction, is also to be clarified. Moreover, given the technical complexity of this device, the next step would consist in ascertaining its feasibility and testing it with some initial experiments.

References

Akbar, Arifa. 2021. "Rise of the robo-drama: Young Vic creates new play using artificial intelligence." theguardian.com. 24 August. Accessed June 23, 2023. https://www.theguardian.com/stage/2021/aug/24/rise-of-the-robo-drama-young-vic-creates-new-play-using-artificial-intelligence.

Akten, Memo. 2021. Deep visual instruments: realtime continuous, meaningful human control over deep neural networks for creative expression. PhD thesis, Goldsmiths, University of London.

Artaud, Antonin. 1958. The Theatre and Its Double. Translated by Marie Caroline Richards. New York: Grove Press, Inc.

Befera, Luca, and Livio Bioglio. 2022. "Classifying Contemporary AI Applications in Intermedia Theatre: Overview and Analysis of Some Cases." CREAI@AI*IA.

Di Bari, Francesca, et al. 2021. "A Journey of Theatrical Translation from Elena Ferrante's Neapolitan Novels: From Fanny & Alexander's No Awkward Questions on Their Part to Story of a Friendship (Including an Interview with Chiara Lagani)." MLN 136 (1).

Epstein, Ziv et al. 2023. "Art and the science of generative AI: A deeper dive." https://arxiv.org/abs/2306.04141.

Hertzmann, Aaron. 2018. "Can Computers Create Art?" Arts 7 (2): 18.

Margiotta, Salvatore. 2020. "La pratica dell’eterodirezione nel teatro di Fanny & Alexander." Acting Archives Review X (20).

Offert, Fabian. 2019. "What Could an Artificial Intelligence Theater Be?" Fabian Offert's Blog. 12 April. Accessed June 23, 2023. https://zentralwerkstatt.org/blog/theater.

Rosa, Rudolf, et al. 2020. "THEaiTRE: Artificial intelligence to write a theatre play." arXiv preprint arXiv:2006.14668.

Sigman, Alexander. 2019. "Robot Opera: Bridging the Anthropocentric and the Mechanized Eccentric." Computer Music Journal 43 (1): 21–37.

Footnotes

1. Source: https://www.heinergoebbels.com/works/stifters-dinge/4 (accessed June 23rd, 2023)

2. Source: https://culture.pl/en/event/robots-perform-lems-prince-ferrix-and-princess-crystal (accessed June 23rd, 2023)

3. Source: https://www.theaitre.com/ (accessed June 23rd, 2023)

4. Source: https://marcodonnarumma.com/works/corpus-nil/ (accessed June 23rd, 2023)

5. Source: https://research.rhizomatiks.com/s/works/discrete_figures/en/ (accessed June 23rd, 2023)

6. Source: https://www.youngvic.org/whats-on/ai (accessed June 23rd, 2023)

7. Source: https://improbotics.org/ (accessed June 23rd, 2023)