This essay introduces the foundations for The Atlas of Dark Patterns, a practice-based PhD research project focused on redefining perspectives on design for user consent in contemporary algorithmic interfaces through participatory reenactment performance. In it we first explore how ethical issues are conventionally defined in the field of Human-Computer Interaction in terms of their involvement with extractivist practices of the attention economy. We then observe how behavioral design informs dark pattern implementation, weaponizing the physiological basis of cognition within the nervous system. We explore how The Atlas of Dark Patterns approaches the method of reenactment within participatory performance to provide contexts in which the affective space between users and their services can be enacted, revealing unconscious mechanisms behind the act of everyday user consent. Following the example of one of its case studies, The Terms of Service Fantasy Reader, we see how a new dark pattern emerged in the performances, expanding the landscape of dark patterns to include emotionally manipulative language weaponizing ethics of care. In order to situate such performance outputs, The Atlas of Dark Patterns takes form as a proposal for a participatory resource for bottom-up mapping of (non-)consent in user experience (UX) design as defined by the lived expertise of end users.

Dark Patterns, Attention Economy, User Experience Design, Re-enactment, Performance, Consent, Microboundaries

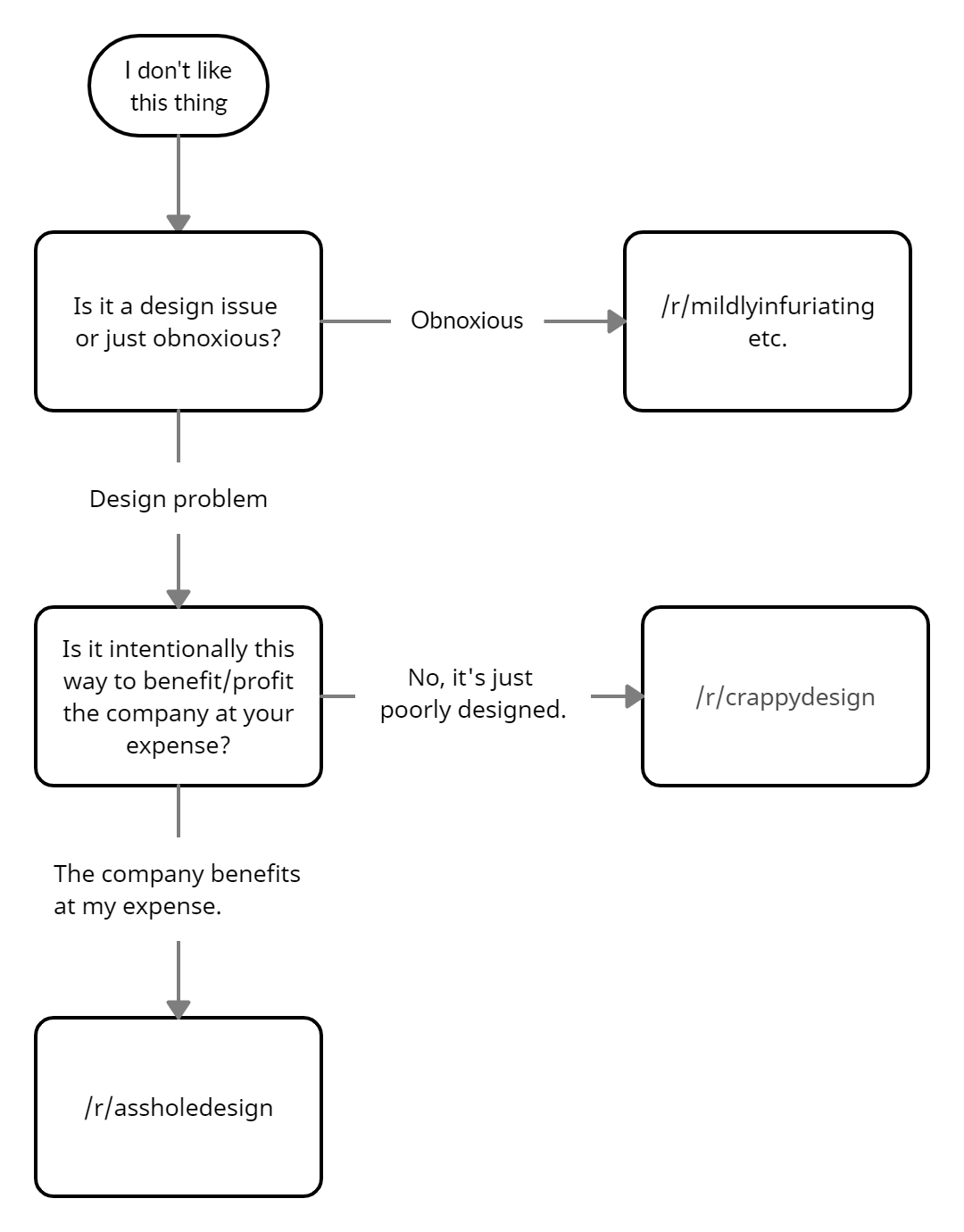

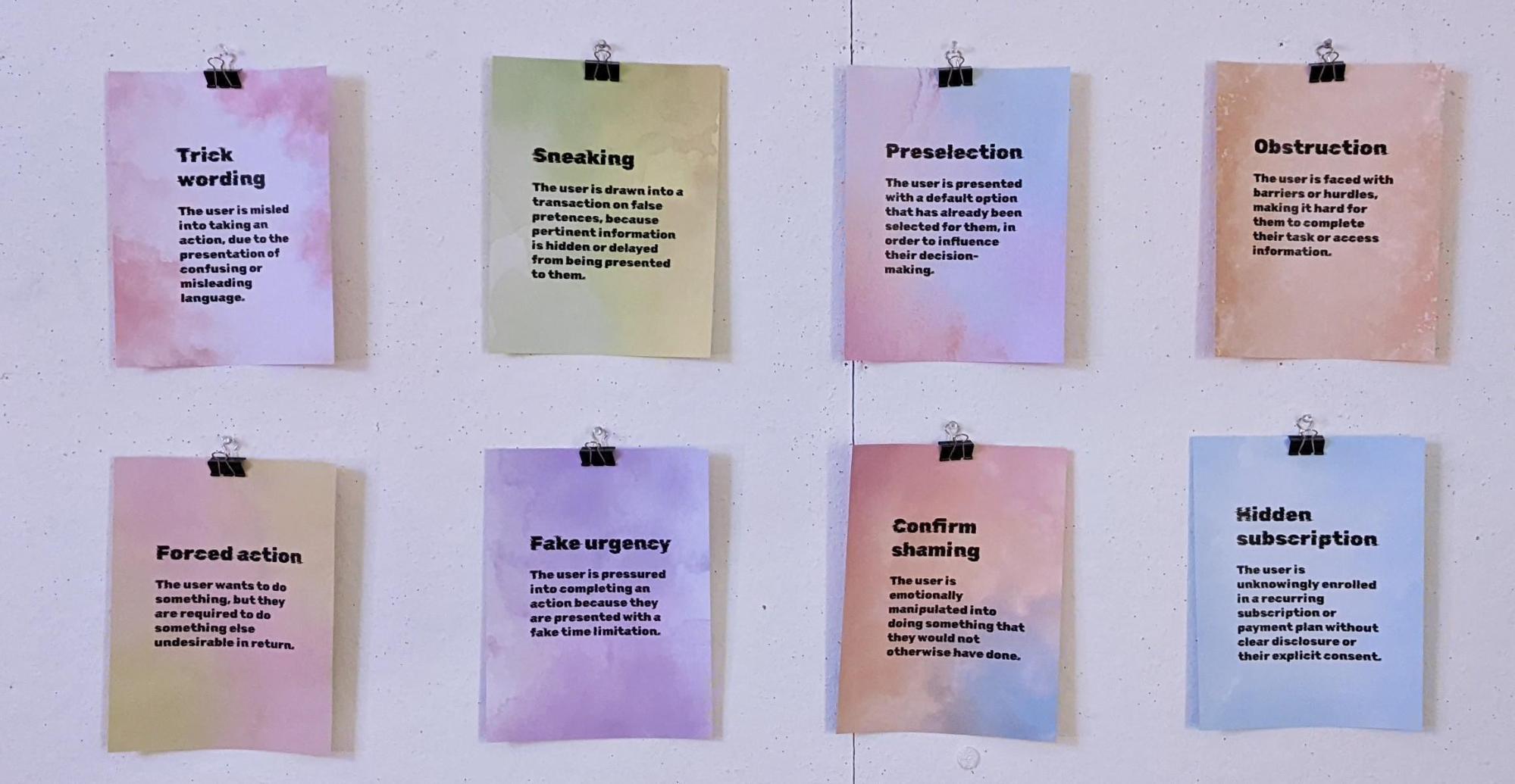

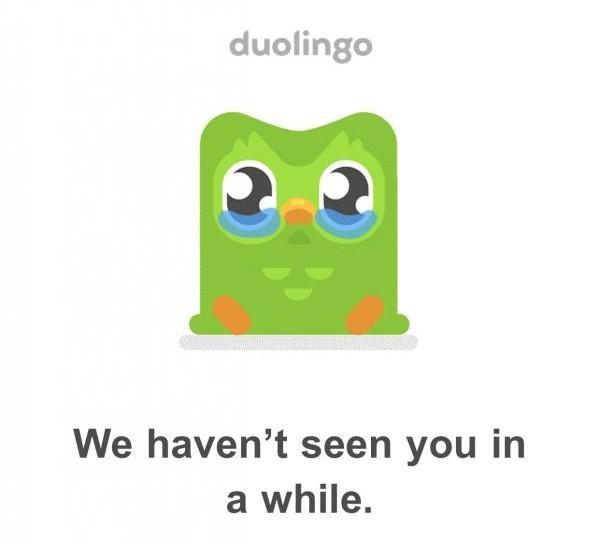

In everyday personalized user experience (UX) design, suggestive and persuasive messages such as the one in the image above can commonly be seen blurring the experience of consent, choice and autonomy, leaving users feeling tricked or plainly bad for not acting in accordance with the default system settings. In the realm of Human-Computer Interaction, the academic home of UX design, examples of non-consensual design are analyzed through the concept of dark patterns, an umbrella term grouping various forms of deceptive design implemented to maximize profit (Narayanan et al. 2020; Bhoot et al. 2020; Gray et al. 2018). Originating from design practice, the term refers to commonly discussed examples of persuasive design1 which implement interaction models obstructing users’ perception of choice. UX designer Harry Brignull coined the term in 2010, describing such patterns as “carefully crafted with a solid understanding of human psychology, and they do not have the user’s interests in mind” (Brignull 2010). He initiated a categorization on the website deceptive.design (once called dark patterns), in which the number of defined types has been growing over the years2. Some of these types are strategies for tricking a user into paying more than what was initially claimed, such as Hidden Subscription3, Sneaking4, Preselection5 and Hidden Costs6. A salient everyday example of these types in combination would be trying to book a cheap flight, where the booking process disorients the user through an overwhelming experience of suggestively adding new expenses at every step on the way to purchasing one’s ticket. Some forms of dark patterns are noticeable from a usability perspective (such as Forced Action), while others are more subtle. Different people may experience these various types differently, which is why the diverse deceptive design practices are commonly used in combination.

What connects these UX models is that they are based on designed constraints which mask or disable existing options within a system so that the user is directed into an interaction model that monetarily benefits the service. In effect, dark patterns are a continuation of existing practices in product design such as crippleware, in which software or hardware features are deliberately disabled until, in the case of software, a user purchases an upgrade8. Dark patterns take these monetizing strategies a step further by constraining and actively nudging the user towards a preferred profitable path of interaction, using diverse strategies to create the appearance of a lack of alternatives. What makes them patterns is that they have proven to be working strategies from a usability perspective, to the extent that they have been adopted across different platforms. More importantly, what makes them dark is that they obscure both the user’s perception of choice and the awareness of manipulation taking place.

Increasing research on manipulative user experience design practices (Gray et al. 2018, Liguri et al. 2021) shows that there is an awareness and a growing space of ethical implications and regulations (Leiser and Santos 2023) around persuasive interaction design implementations. At the same time, the field of personalized interfaces is developing fast, showing a need to devise diverse forms of dark pattern detection and manipulation literacy (Lewis 2014). As a way of approaching the growing space of deceptive design, my research project focuses on the experience of end users in how they detect and relate to non-consensual interactions. In my doctoral thesis, I apply participatory action research principles, based on grounded theory, in which the participants taking part in the public performances shape the factors they are affected by as well as the ways they relate to these factors.

‘Extractivism’ is a term most often understood in relation to large-scale, profit-driven operations for the removal and processing of natural resources such as hydrocarbons, minerals, lumber, and other materials. In an extended sense, the term refers more generally to a mindset in which resources serve a means-ends function, becoming commodities to be extrapolated and turned to profit. (Parks 2021)

Extractivism within design is generally discussed in terms of cognitive and surveillance capitalism (Zuboff 2019), in which emotional and cognitive resources, personal memories and experiences are extracted for profit. In this space, human-centered design based on empathy has been criticized as a form of exploitation and extraction of a person's emotional landscape for the creation of user personae, generalized prototypes of a user profile on which design decisions are based (Costanza-Chock 2020). Dark patterns, however, point to an additional type of extractivism, one of bodily physiological resources. Often functioning as active agents, they both influence and adapt to users' behavior, manifesting as interaction feedback by locking in behavioral options. Within this paradigm of design, suggestive personalized services in effect distribute the act of consent between the interface and the user on the level of unconscious interaction. Such mechanisms in UX design connect to a long history of controlled experiments based on models such as the Skinner box (or operant conditioning chamber), a laboratory environment created for the study of animal behavior.9 As early as the 1950s, findings from these types of experiments started consolidating with the development of predictive technologies10 into what today is called behavioral science.

In the field of user experience, behavioral science is the foundation informing interaction models through behavioral design, a set of methods applying behavioral economics11 principles in order to learn from factors that influence people’s decision-making processes. Behavioral economics relies directly on how bodies learn, and therefore studies the physiological aspects of cognition and affect. It mainly targets the reptilian brain, the part of the nervous system which controls adrenaline and dopamine activators. The sympathetic nervous system12 is also key in this process, as its activation is strategically used for the purpose of maintaining user attention. To the user, this can appear as an amplification of very specific forms of attention and sensory pathways at the expense of others, such as when one suddenly realizes they have been continuously scrolling catered content for the past several hours. One of the risks of such a behavioral practice is that, through training the nervous system, types of interaction can be habituated into an almost automatic response, decreasing consciousness in relation to one’s behavior and desensitizing empathic capacities. Subsequently, the very ability to notice implementations of subtle and shifting forms of dark patterns can easily diminish through habitual repetition of nudged or, in other words, extracted consent. The implementation of behavioral design in effect weaponizes13 the resources of the nervous system as a form of bodily extractivism for behavioral manipulation.

Some of the first well-elaborated critiques of adaptive feedback mechanisms came as early as 1950, such as Norbert Wiener's The Human Use of Human Beings, in which he reflected on the ethical risks of implementing predictive machine learning systems, which he called in the book an “inhuman use of human beings” (Wiener 1950).14 Today predictive technologies are ubiquitous, and as machine learning algorithms advance, the use and extraction of human cognitive and affective resources improves, developing subtler forms of persuasion and targeted UX. Subsequently, the ethical issues surrounding the consequences of data manipulation powered by behavioral design grow in parallel. One such issue is the effect of a habitual normalization of supernormal stimuli,15 directly affecting people’s emotions and decision-making. This practice has been identified as “hijacking the amygdala”16, the tendencies of which we can see in dark pattern examples such as Fake Scarcity, which communicates an imagined lack of resources a user is interested in to elicit a fear of loss.

The Atlas of Dark Patterns explores the landscape of extractivist (Parks 2021) behavioral design of the user personae through studying the affective elements that shape the experience of automated personalization in particular around the affordances of contemporary dominant UX consent. Since the project aims to broaden the scope of factors that should be taken into account when approaching consent in design, it focuses on different people’s lived experiences, attitudes and tactics of consenting. The project doesn’t approach dark patterns from a solutionist mindset, but aims to diversify methods for providing end user perspectives. It is intended to build a body of experiential knowledge on addressing (un)consensual interaction design through collecting and displaying contributions from realized performances. By mapping phenomena that participants felt as non-consensual in the way they have taken place, the method allows approaching dark patterns and the act of consent as a spectrum rather than a binary (in the case of consent) or rigid classification (in the case of dark patterns).

What is particular to algorithmically driven systems such as those present in one’s smartphone is that they are in effect deeply behavioral, adapting and influencing agents designed to actively attempt constant interaction with the user they are customized for. Common everyday examples of such personalized software behavior are instances when one receives a notification about a digital photo album that the service has made especially for them from what it assumes were their travel or holiday photos, or a notification that their personal data has been automatically changed for them, such as their date of birth suddenly being updated.

In my research I approach concepts such as agential assemblage in intra-action (Barad 2007), understanding agency as that which is distributed between entities, 1) both human and non-human and 2) between the conscious, affective and unconscious. I approach this space of distributed agency from a behavioral standpoint, as an intended outcome of attention economy driven design, focusing on aspects in which instances of harmful habituation can occur. One of the ethical concerns this project revolves around is the effect of deceptive design normalization, meaning that the absence of consent pervasive in attention economy driven17 interfaces comes to be accepted and adopted as unproblematic through daily experience. In order to investigate the space of user interaction and behavior, I explore performance as an action-based method for addressing practices around consent that are not always rational. Rather than placing participants into an interview or questionnaire setting as research subjects, collective performance allows them an active role as well as access to resources of affect as a way of resensitizing the attention resources of the nervous system. The performances are ad hoc because they are done in various settings (festivals, conferences, exhibitions) and gather people who come into contact for the experience of the performance and contribution to the project but have most often never met before. They are collective because a group setting doesn’t put pressure and responsibility on an individual or isolated user to carry knowledge or provide all the questions and answers; instead, these are formed through group exchange in a supportive environment. The performance settings explore practices of enactment of various, mostly opaque, user experience conditions, giving these situations a voice and offering a shift of perspective that foregrounds how and what bodies themselves learn through interaction and habituation. For that reason, The Atlas of Dark Patterns proposes collective participatory re-enactment performance18 as a critical practice method in order to explore the execution of mediated algorithmic behavior.

The Atlas of Dark Patterns builds on the output of several action-based research studies. One of them, The Terms of Service Fantasy Reader, is an ad hoc public rehearsal of dramatizing Terms of Service agreements as an inquiry into the opaque conditions of consent. Running as a public participatory performance, The Terms of Service Fantasy Reader is communicated as a designated space and time for the luxury of reading out the Terms of Service19 of various applications in use on participants’ personal devices, focusing on the least clear, misleading or for any other reason strange language found. The practice of collective reading allows participants to effectively vocalize, in a shared space, what they experienced as the felt tone of the language provided. As each participant reads out their chosen segments, the contributions get recorded into a growing interactive online archive20 and web drama, accompanied by screenshots of suggestive app notifications.

Why explore Terms of Service specifically? Inherently non-consensual in the way they are constructed and implemented, they can be seen as the legal backbone of the extractivist behavioral design paradigm. These difficult-to-read legal documents get "signed" on a daily basis, and when an app is downloaded and installed, their default settings often go unquestioned. With options to either accept all the conditions and participate in a world of affordances of platform selfhood, or decline and be left out of social circles, these formats effectively facilitate a consensus based on a lack of choice. In other words, their consenting mechanism can be perceived as a dark pattern in itself.

In the conducted instances of the performance, participants first took on the role of a chosen Terms of Service agreement and were then offered a space of response from their position as end users. Through the various iterations of The Terms of Service Fantasy Reader and the practice of choosing and enacting segments, participants identified a pattern of persuasive language carrying an unusually emotional tone for what would be considered a legal document. Further detection of the types of emotional connotations located in the various examples showed that there is a narrative pattern of a weaponization of empathy for the purpose of persuasion. Such a pattern is present both in Terms of Service agreements and, more overtly, in diverse everyday app messages and notifications. These examples lie between the dark patterns of Confirmshaming and Trick Wording, exploiting ethics of care as a form of surrogate relationality. For the purpose of The Atlas of Dark Patterns glossary, these subtle forms of deceptive design were named microtoxicities, to represent experiences of unease in consenting through various forms of external pressure accumulated over time.

The outcomes from The Terms of Service Fantasy Reader project raise the question of whether more subtle forms of uninformed consent are in fact ethically “darker” by being less transparent. They also suggest that these forms of microtoxicities tend to amplify existing power asymmetries through harmful habituation. Moreover, what emerged from the enactment settings were participant expressions from the role of end users, showing responses in the form of personal microboundaries21 (Cecchinato et al. 2015; Cox et al. 2016). What these findings show is that there is a need for a wider context and acknowledgment of lived user experience in approaching the topic of ethics and consent in user experience design. As one possible format towards that goal, The Atlas of Dark Patterns will be developed with the use of spatial concepts to gather the various written, visual, and vocal outputs of the participatory performances and reflect overlaps between different types of dark patterns from felt user experience, allowing a bottom-up fluid categorization of non-consensual design.

The resources that this project provides are a call for more public attention to the nuance of ethical aspects of behavioral design practice around consent. By developing public participatory performances, the project offers experiential means of detecting dark pattern instances. Subsequently, by mapping everyday tactics of approaching dark pattern landscapes as potential microboundaries, the Atlas is envisioned as a tool that can provide methods for supporting resilience in defining and obtaining informed consent within the current market-driven digital realm. Finally, a much broader question this research raises is what informed and conscious consent can look and feel like outside the constraints of the attention economy.

I would like to thank Luísa Ribas and Christopher Watters for their invaluable feedback on this text as it was forming.

Barad, Karen. 2007. Meeting the Universe Halfway: Quantum Physics and the Entanglement of Matter and Meaning. Durham and London: Duke University Press. https://doi.org/10.1215/9780822388128

Bhoot, Aditi M., Mayuri A. Shinde, and Wricha P. Mishra. 2020. “Towards the Identification of Dark Patterns: An Analysis Based on End-User Reactions”. In IndiaHCI '20: Proceedings of the 11th Indian Conference on Human-Computer Interaction (IndiaHCI 2020). Association for Computing Machinery, New York, NY, USA, 24–33. https://doi.org/10.1145/3429290.3429293

Brignull, Harry. 2010. “Dark Patterns: dirty tricks designers use to make people do stuff.” Harry Brignull’s 90 Percent of Everything. https://90percentofeverything.com/2010/07/08/dark-patterns-dirty-tricks-designers-use-to-make-people-do-stuff/index.html, accessed 23.06.2023.

Cecchinato, M.E., Cox, A.L., & Bird, J. 2015. “Working 9-5? Professional Differences in Email and Boundary Management Practices”. Proceedings of the SIGCHI Conference on Human Factors in Computing Systems. Seoul, South Korea.

Costanza-Chock, Sasha. 2020. Design Justice: Community-led Practices to Build the Worlds We Need. Cambridge, Massachusetts, The MIT Press.

Cox, Anna L., Sandy J.J. Gould, Marta E. Cecchinato, Ioanna Iacovides, and Ian Renfree. 2016. “Design Frictions for Mindful Interactions: The Case for Microboundaries.” In Proceedings of the 2016 CHI Conference Extended Abstracts on Human Factors in Computing Systems (CHI EA '16). Association for Computing Machinery, New York, NY, USA, 1389–1397. https://doi.org/10.1145/2851581.2892410

Fitzduff, Mari. 2021. “The Amygdala Hijack”, Our Brains at War: The Neuroscience of Conflict and Peacebuilding, https://doi.org/10.1093/oso/9780197512654.003.0003, accessed 26 June 2023.

Gray, Colin M, Yubo Kou, Bryan Battles, Joseph Hoggatt, and Austin L. Toombs. 2018. “The Dark (Patterns) Side of UX Design.” In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems (CHI '18). Association for Computing Machinery, New York, NY, USA, Paper 534, 1–14. https://doi.org/10.1145/3173574.3174108

Kropotov, Juri D. 2009. “Chapter 13 - Affective System”, Quantitative EEG, Event-Related Potentials and Neurotherapy, Academic Press, 292–309.

Leiser, Mark, and Cristiana Santos. 2023. “Dark Patterns, Enforcement, and the emerging Digital Design Acquis: Manipulation beneath the Interface”. Social Science Research Network. https://ssrn.com/abstract=4431048

Lewis, Christopher. 2014. “Understanding Motivational Dark Patterns.” In Irresistible Apps. Apress, Berkeley, CA: https://doi.org/10.1007/978-1-4302-6422-4_8

Narayanan, Arvind, Arunesh Mathur, Marshini Chetty, and Mihir Kshirsagar. 2020. “Dark Patterns: Past, Present, and Future: The evolution of tricky user interfaces”. Queue 18, 2, Pages 10 (March-April 2020), 26 pages. DOI:https://doi.org/10.1145/3400899.3400901

Parks, Justin. 2021. “The poetics of extractivism and the politics of visibility.” Textual Practice, 35:3, 353-362, DOI: 10.1080/0950236X.2021.1886708

Raymond, Eric S. 1996. The New Hacker's Dictionary, third edition, MIT Press Academic.

Redström, Johan. 2006. “Persuasive Design: Fringes and Foundations”. In Persuasive Technology. 112–122. https://doi.org/10.1007/11755494_17

Simon, Herbert A. 1971. “Designing Organizations for an Information-rich World.” Computers, communications, and the public interest. Baltimore, MD: Johns Hopkins University Press. pp. 37–52.

Tinbergen, Niko. 1951. The Study of Instinct. Oxford, Clarendon Press. ISBN 978-0-19-857343-2

Wiener, Norbert. 1950. The Human Use of Human Beings: Cybernetics And Society. New edition. New York, N.Y: Da Capo Press

Zuboff, Shoshana. 2019. The Age of Surveillance Capitalism. London, England: Profile Books.

While design can be understood as inherently persuasive (Redström 2006), persuasive technology is defined broadly as “any interactive computing system designed to change people’s attitudes or behaviors” (Fogg 2003, p. 1).↩

At the time of writing, the categorization on the website includes 16 defined types.↩

“The user is unknowingly enrolled in a recurring subscription or payment plan without clear disclosure or their explicit consent.” https://www.deceptive.design/types/hidden-subscription, accessed 23.06.2023.↩

“The user is drawn into a transaction on false pretences, because pertinent information is hidden or delayed from being presented to them.” https://www.deceptive.design/types/sneaking, accessed 23.06.2023.↩

“The user is presented with a default option that has already been selected for them, in order to influence their decision-making.” https://www.deceptive.design/types/preselection, accessed 23.06.2023.↩

“The user is enticed with a low advertised price. After investing time and effort, they discover unexpected fees and charges when they reach the checkout.” https://www.deceptive.design/types/hidden-costs, accessed 23.06.2023.↩

https://www.reddit.com/r/assholedesign/, accessed May 2022, now private.↩

In the case of hardware, users need to buy extra parts to enable elements that are present but disabled. See The New Hacker's Dictionary (Raymond 1996).↩

A laboratory apparatus developed by B. F. Skinner in operant conditioning experiments to study animal behavior, which typically contains a lever that delivers reinforcement in the form of food or water upon being pressed.↩

See more in Jill Lepore’s book If Then: How the Simulmatics Corporation Invented the Future.↩

“Behavioral economics is a discipline examining how emotional, social and other factors affect human decision-making, which is not always rational.” Source: https://www.interaction-design.org/literature/topics/behavioral-economics, accessed 23.06.2023.↩

The part of the nervous system which activates the body’s “fight-or-flight” response.↩

In this particular context, weaponization refers to the way in which neurotransmitter flows become potential weapons for directing user interaction, intentionally circumventing conscious choice as a form of subconscious manipulation.↩

Drawing a parallel to fascist societies, he pointed to a risk of the loss of freedom and increase of censorship in a fully automated society, due to habitual reinforcement of roles, functions and acts, as well as an exploitation of human resources for increasing the profit of factory owners.↩

A sensory stimulus the concentration of which is of an intensity not found in a natural habitat (Tinbergen 1951), such as fast food, porn or, in the context of user experience, interface design tactics such as the infinite scroll or targeted anger engagement on services such as YouTube.↩

The amygdala is “a structure detecting threat or potential punishment and thus generating negative emotions such as fear and anxiety” (Kropotov 2009). An amygdala or emotional hijack is an immediate, exaggerated affective response triggered by a perceived threat (Goleman 1996). An example is the violent and offensive reactive behavior that occurs in some online arguments, called flame wars for the inflammatory nature of their disagreement and escalating affect, which can lead to physical aggression, war mobilization, etc. (Fitzduff 2021).↩

An approach of treating attention as a resource and applying principles of economics for the purpose of attention management, first laid out by psychologist and economist Herbert A. Simon (Simon 1971).↩

The performances apply methods from socio psychodrama, an action based group therapy modality based on the theory of roles, spontaneity and surplus reality, opening the space to explore the narrative that these interface interactions create between service and user in a collective setting.↩

Terms of Service agreements are legal documents that define the grounds upon which someone can engage with a certain service, but they are conceptually demanding, long, and often hermetic to read. At the same time, they are one of the main battlegrounds for user rights.↩

Source: https://termsofservicefantasyreader.com/act-2/ accessed 23.06.2023.↩

Practices by users done to limit the negative effects of intrusive digital experiences.↩